UPM develops Agentic AI framework to complement DIGEST project’s mission in sustainable data engineering

In a compelling demonstration of academic innovation, Alejandro Martínez Esquivias, a student at the Universidad Politécnica de Madrid (UPM), has contributed to the development of a cutting-edge system titled «Agentic AI: An Exploratory and Functional Approach» as part of his final year thesis. This pioneering work explores the orchestration of modular multi-agent systems driven by Large Language Models (LLMs), with particular relevance to scientific and environmental reasoning—strikingly aligning with the goals of the DIGEST project.

DIGEST is a collaborative research initiative hosted at UPM’s School of Engineering and Systems in Telecommunications. Its mission is to advance sustainable data intelligence solutions by integrating artificial intelligence with domain-specific applications in environmental sciences and renewable energy.

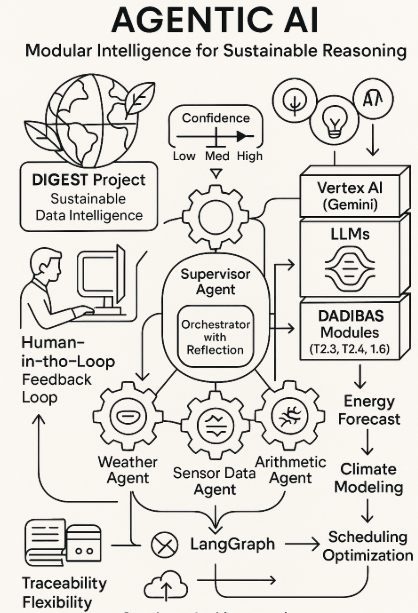

Agentic AI introduces a highly modular architecture powered by Google’s Gemini model through Vertex AI and orchestrated using LangChain and LangGraph frameworks. This system dynamically composes domain-specialized agents capable of handling complex queries across meteorology, sensor data analysis, arithmetic operations, and literature retrieval. What makes this framework particularly innovative is its emphasis on traceable reasoning pipelines and transparency—a shared priority with DIGEST’s focus on explainable AI in critical domains.
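The routing idea described above can be illustrated with a minimal sketch in plain Python. It is not the thesis code and does not use LangChain, LangGraph, or Gemini: keyword matching stands in for the LLM's routing decision, and every name here (`Supervisor`, `TraceStep`, `build_supervisor`, the stub agents) is a hypothetical chosen for illustration. What it shows is a supervisor dispatching a query to domain agents while recording each hop, so the reasoning path can be inspected afterwards.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TraceStep:
    """One recorded hop in the reasoning pipeline."""
    agent: str
    query: str
    output: str


# Hypothetical stub agents; real ones would call an LLM or an external API.
def weather_agent(query: str) -> str:
    return "weather: stubbed forecast"


def sensor_agent(query: str) -> str:
    return "sensors: stubbed readings"


def arithmetic_agent(query: str) -> str:
    return "arithmetic: stubbed result"


def literature_agent(query: str) -> str:
    return "literature: stubbed references"


@dataclass
class Supervisor:
    agents: Dict[str, Callable[[str], str]]
    keywords: Dict[str, List[str]]
    trace: List[TraceStep] = field(default_factory=list)

    def route(self, query: str) -> List[str]:
        # Assumption: keyword matching replaces the LLM's routing decision.
        text = query.lower()
        return [name for name, words in self.keywords.items()
                if any(word in text for word in words)]

    def run(self, query: str) -> List[str]:
        outputs = []
        for name in self.route(query):
            output = self.agents[name](query)
            # Record every hop so the final answer can be traced back.
            self.trace.append(TraceStep(name, query, output))
            outputs.append(output)
        return outputs


def build_supervisor() -> Supervisor:
    return Supervisor(
        agents={
            "weather": weather_agent,
            "sensors": sensor_agent,
            "arithmetic": arithmetic_agent,
            "literature": literature_agent,
        },
        keywords={
            "weather": ["weather", "forecast", "temperature"],
            "sensors": ["sensor", "reading"],
            "arithmetic": ["sum", "multiply", "divide"],
            "literature": ["paper", "literature", "citation"],
        },
    )


if __name__ == "__main__":
    supervisor = build_supervisor()
    supervisor.run("What is the weather forecast, and which papers discuss it?")
    for step in supervisor.trace:
        print(f"{step.agent} -> {step.output}")
```

Keeping the trace as a list of plain records is one simple way to make each agent's contribution auditable; a production system would likely persist it alongside the model's intermediate outputs.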

The project tested ten real-world scenarios, from simple weather queries to advanced multi-step reasoning tasks involving scientific literature. It demonstrated that autonomous segmentation of reasoning and cooperative agent orchestration can substantially improve the reliability of LLM-generated outputs. This is crucial in areas such as energy consumption forecasting or climate data modeling, where interpretability and traceability are vital—core research domains under DIGEST.

Moreover, the project’s foresight in incorporating a Human-in-the-Loop (HITL) mechanism, albeit not fully implemented, reflects a deep alignment with DIGEST’s human-centric approach to trustworthy AI systems. Both initiatives underscore the importance of balancing AI autonomy with human oversight to ensure safe deployment in socially impactful contexts.

The synergy between Agentic AI and DIGEST is evident in their shared commitment to scientific rigor, sustainable development, and scalable AI architectures. Agentic AI’s layered design—with specialized agents for databases, weather systems, bibliographic APIs, and numerical reasoning—can be seen as a microcosm of DIGEST’s larger vision: intelligent systems that not only compute, but also understand and explain their decisions in high-stakes domains.

Agentic AI offers a prototype for reasoning systems that are flexible, explainable, and composable: key qualities sought in DADIBAS WP2 for improving decision-making and predictive capabilities using advanced learning and orchestration techniques. The connection is visible in the following tasks:

- DADIBAS-T2.3 Microservice environment for project usage: Agentic AI’s modular agent architecture parallels the flexible microservices platform proposed in this task.

- DADIBAS-T2.4 DeepLearning, Transfer Learning and Contrastive Learning tools: The thesis’s LLM orchestration and its experimentation with multi-agent reasoning reflect the design and comparison of different ML paradigms addressed here.

- DADIBAS-T2.6 Decision Transformers in Scheduling Optimization: This task explores combining transformers and reinforcement learning with agent-based models, which resonates directly with Agentic AI’s orchestrated reasoning and task delegation through LLM agents.

As DIGEST continues to explore novel intersections between AI and sustainability, Agentic AI serves as a testament to the power of student-led innovation to inform, inspire, and accelerate academic research towards pressing global challenges.

Building on these achievements, new lines of work are foreseen, such as a «Reflective Agent Orchestrator with Confidence-Gated Human-in-the-Loop (HITL)». This pattern refines the existing supervisor by adding a layer of «meta-cognition» or reflection. Agents not only execute tasks but also evaluate and communicate a confidence level regarding their results or the validity of tool responses. The supervisor uses this information to make more nuanced decisions, including the activation of an HITL system.
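As a rough illustration of the confidence-gated pattern, the sketch below shows a supervisor that accepts an agent's answer when its self-reported confidence clears a threshold and otherwise hands the result to a human reviewer. This is an assumption-laden sketch, not the planned design: the names `AgentResult` and `supervise`, the stub agents, and the threshold value of 0.7 are all hypothetical, and how confidence would actually be estimated is left open.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class AgentResult:
    answer: str
    confidence: float  # self-reported by the agent, expected in [0, 1]


def supervise(
    task: str,
    agent: Callable[[str], AgentResult],
    ask_human: Callable[[str, AgentResult], str],
    threshold: float = 0.7,  # arbitrary illustrative value
) -> Tuple[str, str]:
    """Return (answer, decision path), escalating when confidence is low."""
    result = agent(task)
    if not 0.0 <= result.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if result.confidence >= threshold:
        return result.answer, "auto"
    # Below the gate: a human reviews the agent's draft answer.
    return ask_human(task, result), "human"


# Hypothetical stubs used only to exercise both branches.
def confident_agent(task: str) -> AgentResult:
    return AgentResult(answer=f"draft for: {task}", confidence=0.9)


def unsure_agent(task: str) -> AgentResult:
    return AgentResult(answer=f"draft for: {task}", confidence=0.4)


def reviewer(task: str, result: AgentResult) -> str:
    return f"reviewed({result.answer})"


if __name__ == "__main__":
    print(supervise("summarise sensor data", confident_agent, reviewer))
    print(supervise("summarise sensor data", unsure_agent, reviewer))
```

Returning the decision path together with the answer keeps the escalation visible in the output, which fits the emphasis on traceability described earlier in the article.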

Further, more advanced developments are also planned to extend the flexibility and capabilities of such systems.